Amazon Simple Storage Service (S3)

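Amazon S3 (Simple Storage Service) is an object storage service that allows users to store and retrieve any amount of data, including files, backups, and media. Bucket names share a global namespace, but each bucket is created in a specific AWS Region.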

S3 is commonly used for data archiving, content delivery, and disaster recovery, and offers features like versioning, encryption, and access control for enhanced security and management.

Event Notifications

S3 sends notifications for the following events:

- New object creation

- Object removal

- Object restoration

- Reduced Redundancy Storage object loss

- Replication

- S3 Lifecycle expiration and transition

- S3 Intelligent-Tiering automatic archival

- Object tagging

- Object ACL updates (PUT)
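
As a rough illustration of how a subset of these events might be wired to a target, the sketch below builds a notification configuration as a plain dictionary. The shape follows what boto3's `put_bucket_notification_configuration` accepts in its `NotificationConfiguration` argument, as far as I understand it. The Lambda ARN, rule ID, and prefix are hypothetical placeholders.

```python
import json


def build_notification_config(lambda_arn, prefix=""):
    """Route object-created and object-removed events to one Lambda function."""
    rule = {
        "Id": "notify-on-create-and-remove",  # hypothetical rule name
        "LambdaFunctionArn": lambda_arn,
        "Events": ["s3:ObjectCreated:*", "s3:ObjectRemoved:*"],
    }
    if prefix:
        # Only notify for keys that start with the given prefix.
        rule["Filter"] = {"Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}}
    return {"LambdaFunctionConfigurations": [rule]}


config = build_notification_config(
    "arn:aws:lambda:us-east-1:111122223333:function:example-handler",  # hypothetical ARN
    prefix="uploads/",
)
print(json.dumps(config, indent=2))
```

The other event families in the list use the same structure with a different event name in `Events`.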

Mountpoint for Amazon S3

Mount an S3 bucket as a local file system on Linux using the Mountpoint for Amazon S3 file client.

Not compatible with the following storage classes:

- S3 Glacier Flexible Retrieval

- S3 Glacier Deep Archive

- S3 Intelligent-Tiering Archive Access Tier

- S3 Intelligent-Tiering Deep Archive Access Tier

Multi-region access point

Main features:

- Automatic load balancing between buckets.

- Provides failover mechanism.

- Traffic remains within the AWS network.

- Two resource policies must permit access: the Access Point policy and the bucket policy.

- A Multi-Region Access Point does not replicate objects between its buckets by itself. To keep the buckets in sync, configure S3 Replication between them.

Object Lambda Access Point

You can place a Lambda function in front of the bucket, which modifies the returned data based on the configuration in the function.

Request methods to modify responses:

- GET - Filter rows, dynamically resize images, etc.

- LIST - Create a custom view of objects.

- HEAD - Modify object metadata.
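
A minimal sketch of the GET case (filtering rows) could look like the following. It assumes the Lambda event carries `getObjectContext` with `inputS3Url`, `outputRoute`, and `outputToken`, and that the transformed body is returned through the S3 client's `write_get_object_response` call. The extra `s3_client` and `fetch` parameters are hypothetical: they are injected here only so the example runs without AWS access. A deployed handler would take just `(event, context)` and create these itself.

```python
def keep_rows(body: bytes, needle: str) -> bytes:
    """Keep the CSV header plus every row that contains `needle`."""
    lines = body.decode("utf-8").splitlines()
    if not lines:
        return b""
    header, rows = lines[0], lines[1:]
    kept = [row for row in rows if needle in row]
    return ("\n".join([header] + kept) + "\n").encode("utf-8")


def handler(event, context, s3_client, fetch):
    """Fetch the original object, filter it, and hand the result back to S3."""
    ctx = event["getObjectContext"]
    original = fetch(ctx["inputS3Url"])  # presigned URL for the original object
    s3_client.write_get_object_response(
        Body=keep_rows(original, "active"),
        RequestRoute=ctx["outputRoute"],
        RequestToken=ctx["outputToken"],
    )
    return {"status_code": 200}
```

In a real function, `s3_client` would typically be `boto3.client("s3")` and `fetch` something like `lambda url: urllib.request.urlopen(url).read()`.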

VPC endpoints

To access an S3 bucket from a private VPC, VPC endpoints can be provisioned. This ensures that traffic to and from the S3 bucket remains within the AWS network.

There are two types of VPC endpoints: Gateway and Interface.

Gateway endpoint:

- Uses Amazon S3 public IP addresses

- Uses the same Amazon S3 DNS names

- Does not allow access from on-premises

- Does not allow access from another AWS Region

- No additional cost

Interface endpoint:

- Uses private IP addresses from your VPC to access Amazon S3

- Requires endpoint-specific Amazon S3 DNS names

- Allows access from on-premises

- Allows access from a VPC in another AWS Region via VPC peering or AWS Transit Gateway

- Additional charges apply

In both cases, traffic remains within the AWS network.
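
One common companion to either endpoint type is a bucket policy that rejects requests not arriving through a given endpoint. The sketch below builds such a policy as a dictionary using the `aws:SourceVpce` condition key. The bucket name and endpoint ID are hypothetical, and a policy like this also blocks console access from outside the VPC, so treat it as an illustration rather than a recommended default.

```python
import json


def vpce_only_policy(bucket, vpce_id):
    """Deny every S3 action on the bucket unless the request came via `vpce_id`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnlessFromVpce",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
                "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
            }
        ],
    }


policy = vpce_only_policy("example-bucket", "vpce-0123456789abcdef0")  # hypothetical names
print(json.dumps(policy, indent=2))
```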

Presigned URLs

Time-limited access to objects: from 1 minute to 12 hours when created in the AWS console, or up to 7 days when created with the CLI or SDKs. A presigned URL allows upload or download without providing AWS security credentials.

To create a presigned URL, you must provide:

- Amazon S3 bucket

- Object key (for download or upload)

- HTTP method - GET or PUT

- An expiration time interval
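
To show how those four inputs end up in the URL, here is a simplified, stdlib-only sketch of Signature Version 4 query-string signing. In practice an SDK does this for you (for example boto3's `generate_presigned_url`). The function name `presign` is hypothetical, the credentials are the placeholder values used in AWS documentation, and temporary credentials (which need an extra session-token parameter) are not handled.

```python
import datetime
import hashlib
import hmac
from urllib.parse import quote

MAX_EXPIRES = 7 * 24 * 3600  # 7 days, the upper limit mentioned above


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def presign(method, bucket, key, expires, access_key, secret_key, region, now):
    """Return a presigned URL for `method` (GET or PUT) on bucket/key."""
    if not 1 <= expires <= MAX_EXPIRES:
        raise ValueError("expires must be between 1 second and 7 days")
    host = f"{bucket}.s3.{region}.amazonaws.com"
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")  # `now` must be a UTC datetime
    date = amz_date[:8]
    scope = f"{date}/{region}/s3/aws4_request"
    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": "host",
    }
    query = "&".join(
        f"{quote(k, safe='-_.~')}={quote(v, safe='-_.~')}" for k, v in sorted(params.items())
    )
    path = "/" + quote(key, safe="/-_.~")
    # Canonical request: method, path, query, headers block, signed headers, payload hash.
    canonical_request = "\n".join(
        [method, path, query, f"host:{host}\n", "host", "UNSIGNED-PAYLOAD"]
    )
    string_to_sign = "\n".join(
        ["AWS4-HMAC-SHA256", amz_date, scope,
         hashlib.sha256(canonical_request.encode("utf-8")).hexdigest()]
    )
    # Derive the signing key: date, region, service, then the fixed terminator.
    signing_key = _hmac(("AWS4" + secret_key).encode("utf-8"), date)
    for part in (region, "s3", "aws4_request"):
        signing_key = _hmac(signing_key, part)
    signature = hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"https://{host}{path}?{query}&X-Amz-Signature={signature}"


url = presign(
    "GET", "example-bucket", "reports/q1.csv", 3600,
    "AKIAIOSFODNN7EXAMPLE", "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY",  # placeholder credentials
    "us-east-1", datetime.datetime(2024, 1, 1, tzinfo=datetime.timezone.utc),
)
print(url)
```

The signature covers the method, key, and expiry, so changing any of them in the URL invalidates it.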

Access point

Use an Access Point to enforce an access policy applied on top of the S3 bucket resource policy.

Additionally, you can configure the Access Point to only accept requests from a VPC and/or block public access at the Access Point level.

Limitations:

- You can only perform object operations with Access Points (you cannot delete or modify the bucket).

- Access Point names must meet certain conditions (e.g., comply with DNS naming restrictions).

Lifecycle

Automatically transition objects to another storage class or expire (delete) them.

To manage the lifecycle, create an S3 Lifecycle configuration, which consists of a set of rules that define actions.

There are 2 types of actions:

- Transition action - move objects between storage classes.

- Expiration action - delete objects.
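
A lifecycle configuration combining both action types might look like the sketch below. The dictionary follows the shape boto3's `put_bucket_lifecycle_configuration` takes in its `LifecycleConfiguration` argument, as far as I understand it. The rule ID, prefix, and day counts are hypothetical.

```python
import json

lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-then-expire-logs",  # hypothetical rule name
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},  # apply only to keys under logs/
            # Transition actions: move objects to cheaper storage classes over time.
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            # Expiration action: delete objects one year after creation.
            "Expiration": {"Days": 365},
        }
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))
```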

Object Lock

S3 Object Lock can help prevent Amazon S3 objects from being deleted or overwritten (WORM model - write-once-read-many) for a fixed amount of time or indefinitely. It can be applied to the whole bucket or to a specific object.

There are two ways to manage objects:

- Retention period – a fixed period of time during which an object remains locked.

- Legal hold – has no expiration date, and the hold remains in place until you explicitly remove it.
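
The interaction between the two mechanisms can be sketched as a small predicate. This is a simplified model for illustration only: it ignores retention modes and any permissions that might allow a lock to be bypassed, and the function name is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def is_locked(now, retain_until=None, legal_hold=False):
    """Return True while either a legal hold or an unexpired retention period applies."""
    if legal_hold:
        return True  # a legal hold has no expiry; it must be removed explicitly
    return retain_until is not None and now < retain_until


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(is_locked(now, retain_until=now + timedelta(days=30)))                   # retention still running
print(is_locked(now, retain_until=now - timedelta(days=1)))                    # retention expired
print(is_locked(now, retain_until=now - timedelta(days=1), legal_hold=True))   # hold overrides expiry
```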

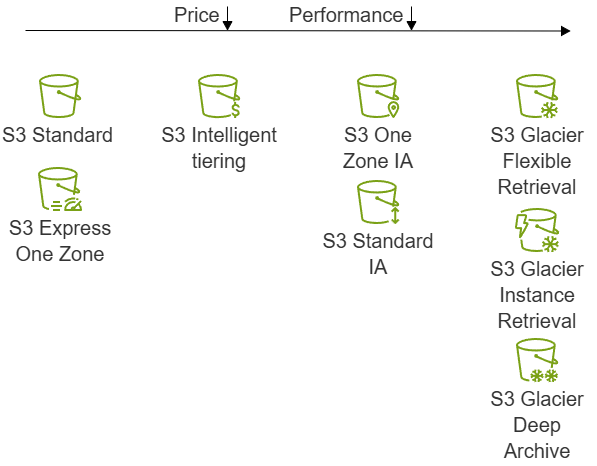

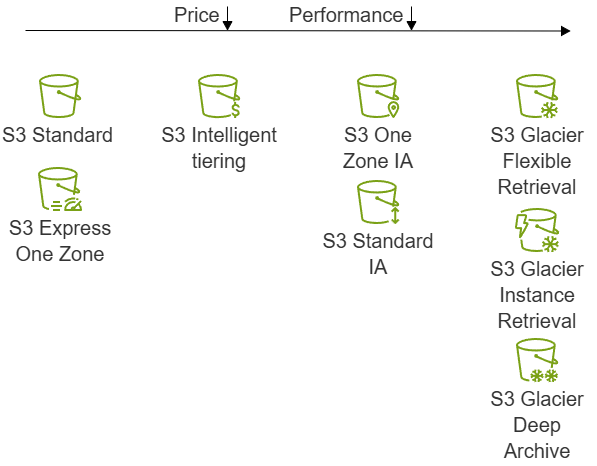

Storage Classes

Each object in S3 is associated with a storage class. You control how much you spend by choosing the right storage class.

- High Performance (single-digit millisecond data access):

  - S3 Standard (default)

  - S3 Express One Zone (the lowest latency)

  - Reduced Redundancy Storage (not recommended)

- S3 Intelligent-Tiering (automatically moves data to the most cost-effective tier):

  - Frequent Access (default)

  - Infrequent Access (objects not accessed in 30 consecutive days)

  - Archive Instant Access (objects not accessed in 90 consecutive days)

  - Archive Access (optional tier)

  - Deep Archive Access (optional tier)

- Infrequently Accessed (millisecond access, charges a retrieval fee):

  - S3 Standard-IA (multiple AZs)

  - S3 One Zone-IA

- Lowest Cost:

  - S3 Glacier Instant Retrieval (milliseconds retrieval)

  - S3 Glacier Flexible Retrieval (minutes retrieval)

  - S3 Glacier Deep Archive (no real-time access)

Batch Operations

Perform operations on multiple objects with one request and track progress:

- Copy objects

- Invoke Lambda functions

- Replace tags

- Delete tags

- Replace ACLs

- Restore objects

Replication

You can use replication to enable automatic, asynchronous copying of objects across Amazon S3 buckets.

Types of replication:

- Live - automatically replicates objects uploaded to the bucket

- On-demand - replicates existing objects

Requirements:

- Versioning must be enabled for both the source and destination buckets.

- The source and destination buckets can be in the same Region (Same-Region Replication) or in different Regions (Cross-Region Replication).

- S3 must have permissions to replicate objects from the source to the destination bucket.
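
As an illustration, a live-replication rule can be expressed as the dictionary below, together with a small pre-flight check for the versioning requirement. The shape follows what boto3's `put_bucket_replication` accepts in `ReplicationConfiguration`, as far as I understand it. The role ARN, bucket name, and helper `versioning_ready` are hypothetical.

```python
import json


def versioning_ready(source_status, destination_status):
    """Both buckets must report versioning status 'Enabled' before replication can be set up."""
    return source_status == "Enabled" and destination_status == "Enabled"


replication_configuration = {
    # IAM role that S3 assumes to copy objects (hypothetical ARN).
    "Role": "arn:aws:iam::111122223333:role/example-replication-role",
    "Rules": [
        {
            "ID": "replicate-everything",
            "Priority": 1,
            "Status": "Enabled",
            "Filter": {},  # empty filter: the rule applies to all objects
            "DeleteMarkerReplication": {"Status": "Disabled"},
            "Destination": {"Bucket": "arn:aws:s3:::example-destination-bucket"},
        }
    ],
}

if versioning_ready("Enabled", "Enabled"):
    print(json.dumps(replication_configuration, indent=2))
```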

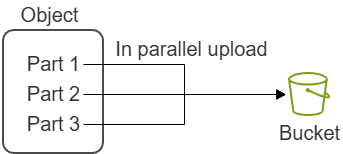

Multipart upload

Upload objects up to 5 TB with several threads using CLI, SDK, or REST API. Consider using it when an object is larger than 100 MB.

Advantages:

- Improved throughput.

- Quick recovery from any network issues.

- Pause and resume object uploads.

- Begin an upload before you know the final object size.
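
The sketch below shows one way to split an object into parts before uploading them in parallel. It assumes the usual multipart limits: at most 10,000 parts, each between 5 MiB and 5 GiB (the last part may be smaller). The function name and the 8 MiB default are hypothetical choices.

```python
import math

MIB = 1024 ** 2
MIN_PART = 5 * MIB          # minimum part size (except the last part)
MAX_PART = 5 * 1024 * MIB   # maximum part size (5 GiB)
MAX_PARTS = 10_000          # maximum number of parts per upload


def plan_parts(object_size, preferred_part_size=8 * MIB):
    """Return a list of (part_number, offset, length) tuples covering the object."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    # Grow the part size if the preferred size would need more than MAX_PARTS parts.
    part_size = max(preferred_part_size, MIN_PART, math.ceil(object_size / MAX_PARTS))
    if part_size > MAX_PART:
        raise ValueError("object is too large for a single multipart upload")
    return [
        (number, offset, min(part_size, object_size - offset))
        for number, offset in enumerate(range(0, object_size, part_size), start=1)
    ]


for part in plan_parts(20 * MIB):
    print(part)
```

Each tuple maps to one upload-part request, so a failed part can be retried without resending the others.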

Bucket types

General purpose:

- Recommended for most use cases.

- All storage classes except for S3 Express One Zone.

Directory:

- Organized hierarchically into directories (as opposed to the flat structure of general purpose buckets).

- Support the S3 Express One Zone and S3 One Zone-Infrequent Access storage classes.

Versioning

- Each object key points to the current version of the object.

- Uploading an object with the same key creates a new version, which becomes the current version.

- If an object is deleted without specifying the version ID, a new version (delete marker) is created. However, all previous object versions are retained unless explicitly deleted.

- Helps you recover objects from an overwrite or accidental deletion.
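
The behavior described above can be mimicked with a tiny in-memory model. This is a toy illustration, not the S3 API: the class name is hypothetical, and real version IDs are opaque strings rather than the sequential `v1`, `v2` used here.

```python
import itertools


class VersionedBucket:
    """Toy model of a versioning-enabled bucket."""

    def __init__(self):
        self._versions = {}  # key -> list of (version_id, body); body None = delete marker
        self._ids = itertools.count(1)

    def put(self, key, body):
        """Store a new version, which becomes the current one."""
        version_id = f"v{next(self._ids)}"
        self._versions.setdefault(key, []).append((version_id, body))
        return version_id

    def delete(self, key, version_id=None):
        """Without a version ID, add a delete marker; with one, remove that version."""
        if version_id is None:
            return self.put(key, None)
        self._versions[key] = [v for v in self._versions.get(key, []) if v[0] != version_id]
        return version_id

    def get(self, key, version_id=None):
        """Return the current version, or a specific one if `version_id` is given."""
        versions = self._versions.get(key, [])
        if version_id is not None:
            versions = [v for v in versions if v[0] == version_id]
        if not versions or versions[-1][1] is None:
            raise KeyError(key)
        return versions[-1][1]


bucket = VersionedBucket()
first = bucket.put("report.txt", b"draft")
bucket.put("report.txt", b"final")
marker = bucket.delete("report.txt")     # adds a delete marker; older versions remain
print(bucket.get("report.txt", first))   # an old version is still readable by ID
bucket.delete("report.txt", marker)      # removing the marker restores the object
print(bucket.get("report.txt"))
```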

Transfer acceleration

Fast, easy, and secure file transfers over long distances between your client and an S3 bucket. Leverages Amazon CloudFront's globally distributed edge locations to route data to S3 over an optimized network path.

Additional data transfer charges may apply.

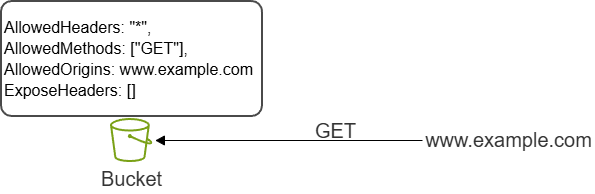

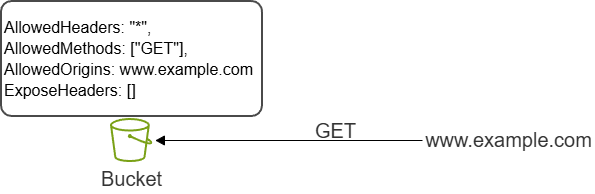

CORS

Cross-Origin Resource Sharing (CORS) defines a way for client web applications loaded in one domain to interact with resources in a different domain.

CORS configuration:

- AllowedHeaders - Specifies which headers are allowed in a preflight request through the Access-Control-Request-Headers header.

- AllowedMethods - Specifies the HTTP methods that are allowed, such as GET, PUT, POST, DELETE, and HEAD.

- AllowedOrigins - Specifies the origins that are allowed to make cross-domain requests.

- ExposeHeaders - Identifies response headers that you want customers to access from their applications.
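
Put together, the four elements might appear in a CORS configuration like the sketch below. The dictionary follows the shape boto3's `put_bucket_cors` takes in `CORSConfiguration`, as far as I understand it. The origin and the exposed header are hypothetical choices.

```python
import json

cors_configuration = {
    "CORSRules": [
        {
            "AllowedHeaders": ["*"],                         # accept any requested header in preflight
            "AllowedMethods": ["GET", "PUT"],                # methods the browser may use
            "AllowedOrigins": ["https://www.example.com"],   # hypothetical web app origin
            "ExposeHeaders": ["ETag"],                       # response header readable from JavaScript
        }
    ]
}

print(json.dumps(cors_configuration, indent=2))
```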

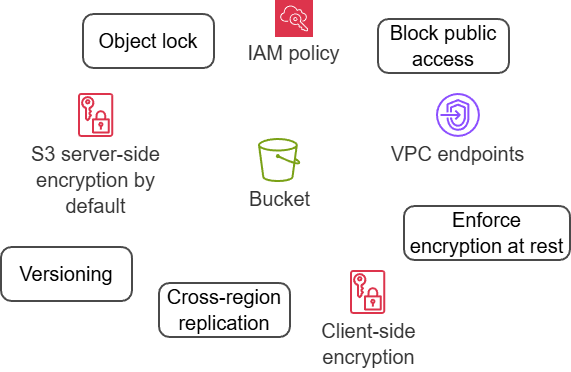

Security

Ways to protect data in S3:

- S3 block public access

- IAM roles and resource-based policies

- S3 access points - network endpoints to share data

- ACLs (not recommended by AWS) and object ownership

- Client-side encryption

Server-side encryption

Depending on how much you choose to manage encryption keys, you have 3 options:

- Amazon S3 managed keys - SSE-S3 (you don't manage keys; default option).

- AWS Key Management Service keys - SSE-KMS (you can view the keys, edit their key policies, and audit key usage in AWS CloudTrail).

- Customer-provided keys - SSE-C (you manage the keys; S3 encrypts and decrypts the data).

Client-side encryption

Your objects aren't exposed to any third party, including AWS. If you lose the key, you lose the data.

Enforcing encryption

By default, all objects uploaded to S3 are encrypted with Amazon S3 managed keys (SSE-S3). S3 manages and rotates the keys; you don't see or manage them.

When uploading the object you can change how the object is encrypted - SSE-KMS (use KMS service), SSE-C (provide your own key). You control the encryption by providing headers in the request.
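
The request headers in question can be sketched as follows. The header names are the standard `x-amz-server-side-encryption*` headers. The helper function names and the KMS key alias are hypothetical, and SDKs normally set these headers for you from higher-level parameters.

```python
import base64
import hashlib
import os


def sse_kms_headers(kms_key_id=None):
    """Headers asking S3 to encrypt the object with a KMS key (SSE-KMS)."""
    headers = {"x-amz-server-side-encryption": "aws:kms"}
    if kms_key_id:
        headers["x-amz-server-side-encryption-aws-kms-key-id"] = kms_key_id
    return headers


def sse_c_headers(key: bytes):
    """Headers supplying your own 256-bit key (SSE-C)."""
    if len(key) != 32:
        raise ValueError("SSE-C expects a 256-bit (32-byte) key")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        "x-amz-server-side-encryption-customer-key": base64.b64encode(key).decode("ascii"),
        # The MD5 digest lets S3 verify the key arrived intact.
        "x-amz-server-side-encryption-customer-key-MD5": base64.b64encode(
            hashlib.md5(key).digest()
        ).decode("ascii"),
    }


print(sse_kms_headers("alias/example-key"))  # hypothetical key alias
print(sorted(sse_c_headers(os.urandom(32))))
```

With SSE-C the same key headers must be sent again on every download, since S3 does not store the key.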
